Mind-reading technology – the future of H2M interaction?

In a very early indication of how AI and machine learning could one day transform the way we interact with machines, and with each other, researchers from the University of California, San Francisco, have unveiled an AI system in development that can ‘translate’ brain activity into text.

The technology could eventually help patients who can’t speak or type to communicate. Dr. Joseph Makin, a co-author of the research, said the work could become “the basis of a speech prosthesis.”

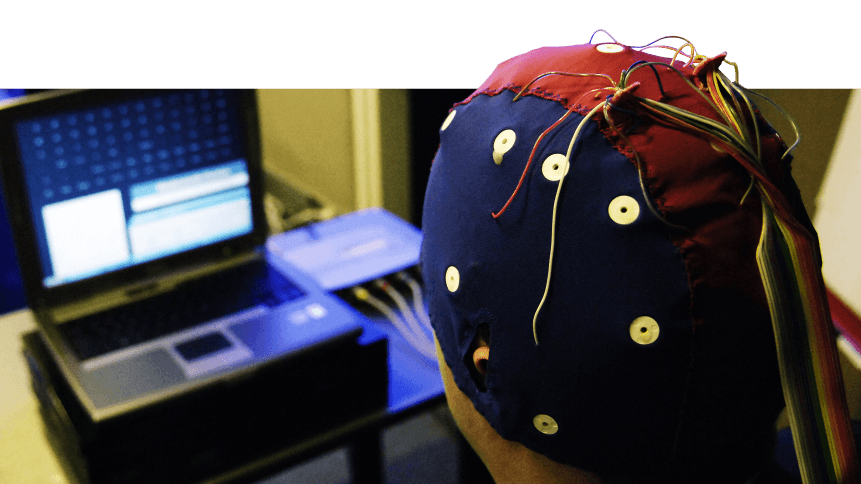

The system was developed with the help of four participants who already had electrode arrays implanted in their brains to monitor epileptic seizures.

The participants’ neural activity was recorded as they read sentences aloud. A machine-learning algorithm then analyzes this data, turning the corresponding brain activity into a string of numbers.

The system also compares sound predictions made from the brain activity data against recordings of the actual speech. In a second stage, it converts the string of numbers into a sequence of words.

At first, the system produced nonsensical sentences. For example, “Those musicians harmonize marvelously” was decoded as “The spinach was a famous singer.”

As the system improved, the team was able to generate written text directly from brain activity data. When tested on a vocabulary of up to 300 words, the average error rate was as low as 3 percent.
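The article does not say how the error rate was measured. Speech-decoding results are commonly reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the decoded sentence into the reference sentence, divided by the number of words in the reference. Assuming the 3 percent figure is a WER, it would mean roughly 3 word errors per 100 words spoken. The snippet below is a minimal sketch of that standard metric in Python; the function name `word_error_rate` is our own and is not taken from the research.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumption: the article's "error rate" is read here as word error rate.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")

    # dist[i][j] = edits needed to turn the first i reference words
    # into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# The early, nonsensical decoding quoted in the article
print(word_error_rate("Those musicians harmonize marvelously",
                      "The spinach was a famous singer"))  # → 1.5
```

Scored this way, the early “spinach” output has a WER of 150 percent: all four reference words are substituted and two extra words are inserted, which shows that WER can exceed 100 percent when the decoder produces too many words.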

“We are not there yet,” said Makin. Still, the system should become more accurate and efficient as it ‘learns’ to match neural signals to human speech.

Human-to-machine interaction

Although it was built on a small data set, the ‘brainwave-to-text’ prototype developed at the University of California could lay the groundwork for future methods of human-to-machine interaction.

While healthcare applications underlie the current work, similar systems, if they became widely available, could feasibly allow users to control programs with their thoughts alone.

This article, for example, could be written without a keyboard, or a developer could write code without typing a single line.

We can imagine how this might progressively evolve: an operator could control warehouse machinery mentally, without any visible interface, while an AR-enabled field of vision provides them with a feed of up-to-the-second information.


However, experts who see similar promise in the system developed in California also suggest that, even at this early stage, regulation should be considered.

Dr. Mahnaz Arvaneh from Sheffield University told The Guardian that it isn’t too early for stakeholders to start planning and considering ethical guidelines, even though “we [are still] very, very far away from the point that machines can read our minds.”

Surprisingly, ‘mind-reading’ technologies are not actually new: work on them has been under way for the past decade.

At Facebook’s F8 conference in 2017 (an annual two-day event where developers take the stage and share their ideas on the future of technology), the company announced that it was working on “a system that will let people type with their brains.”

At the time, a press release stated that the social media giant’s goal was “creating a silent speech system capable of typing 100 words per minute straight” from brain activity.

Highlighting that this is five times the speed of an average person typing on mobile, the company claimed it could revolutionize the way we communicate with our devices, friends, colleagues, and communities.