IT pros think AI regulation is the way forward

Conversations around ethics are now part and parcel of artificial intelligence (AI) technology.

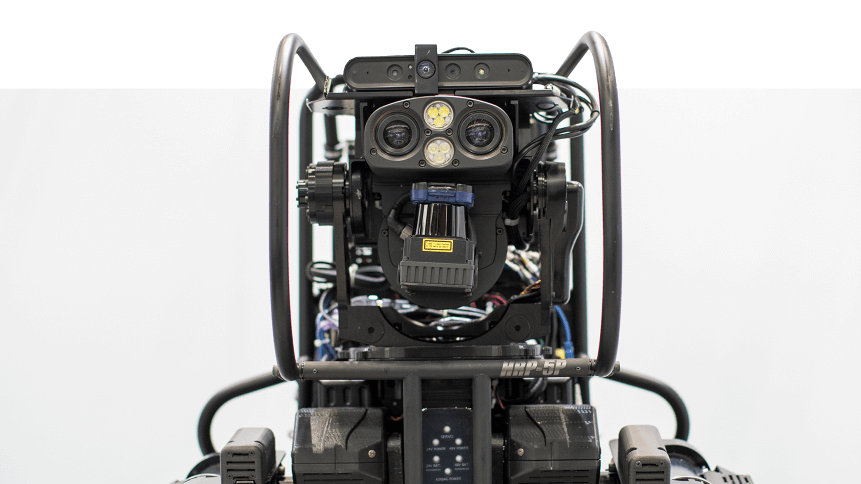

Bad data sets have led to one too many occurrences of AI demonstrating cultural bias, while the technology’s increasing use in military settings, including autonomous weaponry, has been branded, well, pretty inhumane.

Amid numerous other examples, these occurrences contribute to the debate around the development of ethical AI technology. An urgency to ‘do something’ before it’s too late has led to the creation of organizations such as OpenAI, co-founded by Elon Musk, with the aim of fostering the development of AI that benefits humanity as a whole.

Tech giant Google, meanwhile, recently launched an AI ethics panel to offer guidance on issues relating to AI, automation, and emerging tech, and on their use in cases such as facial recognition. The panel has since come under fire, however, for appointing an allegedly ‘transphobic’ member.

The importance of ethical AI is such that a new study by SnapLogic in partnership with Vanson Bourne revealed that 94 percent of corporate IT decision makers in the UK and US are calling for more attention to responsible and ethical AI development.

But it goes further. To enforce the development of ethical AI, a still sizeable majority (87 percent) of IT heads believe AI development should be regulated to ensure it serves the best interests of business, governments, and citizens alike. Of that group, just shy of a third (32 percent) said regulation should come from a combination of government and industry, while 25 percent believed it should be the responsibility of an independent industry consortium.

With the pool of respondents representing a range of industries, however, some sectors proved more open to the heavy hand of regulation than others.

Almost a fifth (18 percent) of IT leads in manufacturing were against the regulation of AI, followed by 13 percent in both the technology sector and the retail, distribution, and transport sector.

Those against regulation believed it could put the brakes on innovation, and that development should be at the discretion of the programs’ creators.

“Data quality, security and privacy concerns are real, and the regulation debate will continue. But AI runs on data — it requires continuous, ready access to large volumes of data that flows freely between disparate systems to effectively train and execute the AI system,” said Gaurav Dhillon, CEO at SnapLogic.

“Regulation has its merits and may well be needed, but it should be implemented thoughtfully such that data access and information flow are retained. Absent that, AI systems will be working from incomplete or erroneous data, thwarting the advancement of future AI innovation.”

Who bears responsibility?

Legislation put to one side, the report found that more than half (53 percent) of IT leaders believe “ultimate responsibility” for AI systems being developed ethically and responsibly lies with the organizations developing those systems, regardless of whether they are commercial or academic entities.

Surprisingly, 17 percent said it was the specific individuals working on AI projects who should be held accountable for the ethical robustness of their work. That figure was more than twice as high in the US (21 percent) as it was in the UK (9 percent). A similar number (16 percent) said an independent global consortium, comprising representatives of government, academia, research institutions, and businesses, is the only way to establish fair ethical AI rules and protocols.

Some initiatives like this are already in motion, aiming to provide AI support, guidance, and oversight; the European Commission High-Level Expert Group on Artificial Intelligence is one such example. Survey respondents viewed these kinds of groups positively, with half believing organizations would take guidance and adhere to recommendations from expert groups like this as they develop their systems.

Fifty-five percent, meanwhile, believed such groups would help foster better collaboration between companies developing AI. Brits were (characteristically) more skeptical though, with 15 percent believing AI developers would “push the limits” of expert groups’ advice, compared to just nine percent of Americans.

Furthermore, five percent of UK IT heads indicated that guidance or advice from oversight groups would be effectively useless in driving ethical AI development unless it became enforceable by law.

“AI is the future, and it’s already having a significant impact on business and society,” said Dhillon.

“However, as with many fast-moving developments of this magnitude, there is the potential for it to be appropriated for immoral, malicious or simply unintended purposes. We should all want AI innovation to flourish, but we must manage the potential risks and do our part to ensure AI advances in a responsible way.”